Many people (home labbers, tech enthusiasts, small businesses, and enterprises) want to run services or web pages that are available on the internet and not just on their home or business network. There are various ways to accomplish this, and a very popular one is to use Docker containers. Docker is a great tool that allows for running programs and services individually or in “stacks”. It generally makes very efficient use of system resources and can run on systems with very low resources. Each container (if so designed) exposes one or more ports so that its services can be reached.

In order for people on the internet to access these ports (which are typically behind a firewall or router), some people use “port forwarding” to connect inbound web traffic directly to the designated ports on the Docker host within their LAN. While this works, it’s generally not recommended for security reasons. Port forwarding also comes with some limitations. For instance, if you only have one WAN IP address but want to run two or more services on the typical web ports (80 or 443), you can only route each port to one of them. One alternative to port forwarding is a tunneling technology like CloudFlare Tunnels or something similar. This is generally considered safer than forwarding ports on the firewall, but it comes with its own limitations and possible privacy concerns. (I may do an article on CloudFlare Tunnels at a later time.)

But some people either don’t have the extra hardware to act as a server, or running a server on their home network violates their ISP’s policy, or they have concerns about uptime and reliability, or they just don’t feel comfortable exposing their home or business network to the internet. In cases where running the service on the LAN is not an option or not desired, a leased VPS (Virtual Private Server) may be the best option. That is the option I am discussing here today.

As a Systems Engineer by day and a tech enthusiast by night, I personally use 3 different VPS providers (Linode, Hostinger, and RackNerd). These are all great services, and each comes with its own pros and cons. While my personal favorite is Linode (Akamai), I choose different providers for different things. For me, the choice mostly comes down to the VPS resources (CPU, RAM, disk space, and monthly bandwidth) needed for a particular project. I also need to consider whether a project will need backups, snapshots, and our topic today: a separate firewall. Each provider’s pricing on these things differs somewhat. In addition, they sometimes run specials which may be a better fit for a particular project at the time I need it. So I basically choose a provider based on those criteria.

Almost all of my projects are built on Docker running on a Linux VPS. Docker is a powerful tool for deploying services quickly, but running it on a VPS directly exposed to the internet introduces significant risks. So it’s always best practice to run your VPS behind a separate firewall. While many Linux-based distros come with iptables built in, which can be managed by UFW (Uncomplicated Firewall), Docker has a bad habit of bypassing firewalls that live on the Docker host (like UFW) by writing its own iptables rules for every published port. This behavior can expose internal container ports to the internet. And the bad part is, UFW will still report the ports as closed. However, if you try to reach those “closed” ports from the internet, you will quickly find out that they are quite reachable.

Linode provides an excellent firewall for their VPSs, as does Hostinger. But some time ago I had a project in mind that required a machine with a bit more resources than I usually use. As my personal funds were running low, I looked around for another provider that could give me the resources I needed at a price I could afford. This project was more for fun and I could not justify too much added expense for it. Then I stumbled across a really nice deal on RackNerd. It was November 2023 and RackNerd was running their “11.11” special, where I was able to snag a machine with 3 cores, 4GB RAM, 50GB disk, and 6TB monthly bandwidth for ~$37/year. Yep, you read that right. That’s per YEAR! And it renews at that price as well. This deal seemed PERFECT to me so I jumped on it. (That deal is gone as of this writing, but now they have their new 2025 sale, which is even better. See that deal here while it lasts.) My point here is, they always have some specials on their KVM VPS systems. You just need to catch them at the right time.

As I started to set up my VPS, I quickly found out that RackNerd does not offer a separate firewall like my other providers do. Uh oh! This project, like so many others, was going to be built on Docker, and I knew that having no firewall in front of my VPS was a non-starter. So I had to either find a solution to this problem or waste my money. So the search began. I found a few options, including altering the way Docker interacts with iptables, a script/program one person had written to alter iptables behavior, and a few others. But none of these ideas appealed to me because they each had side effects that I did not want to deal with. So the search continued. Then I came across some info about how to change a few things in the containers themselves and I was intrigued. I tried some things on a few test containers to see how they would work, and I got it working. Now I am not a Docker expert by any means, but since the process I followed worked for me, I thought I would document it and share it here on 502tech.com for others to use or tinker with.

In this guide I will walk you through the steps I took to secure Docker on an exposed VPS by combining Nginx Proxy Manager (NPM) as a reverse proxy, free SSL certificates from Let’s Encrypt, and some strategic networking configuration in the Docker containers to prevent unnecessary port exposure to the web. For my test case, I chose a few containers to demonstrate how this works: Draw.io, Whoogle, and Portainer CE. And don’t forget, NPM itself also runs in a container.

Before we begin, please read the notes and assumptions below.

NOTE1: This solution is not perfect and may not work for all situations. This guide is intended as a demonstration only. While I have tried to cover every little step in these instructions, there may be bits and pieces missing.

NOTE2: NGINX Proxy Manager is a great reverse proxy. The one flaw I see in it is that, by default, its admin interface runs on HTTP port 81 without SSL. Personally, I like to solve this by blocking access to this port at the firewall, installing a CloudFlare Tunnel, and using CloudFlare’s excellent Zero Trust features to access it. I tend to do it this way even with installs that have a traditional firewall in front of them. Alternatively, one could initially log in on HTTP port 81, set up a proxy host to access the NPM UI itself over port 443 with an SSL cert, and then remove the port 81 access the way we do with the other containers. As the instructions below are just for demonstration purposes, I don’t delve too deeply into this subject, but I think you should be aware of it.

NOTE3: If you’re already familiar with VPS’s, CloudFlare, Docker, reverse proxies, container management etc, I created a TL;DR section at the bottom of this article so you can skip right to the nuts and bolts of what is being done differently.

With all of that in mind, let’s get started. The instructions below assume the following:

PREREQUISITES / ASSUMPTIONS:

1) You have already purchased a VPS somewhere to perform these steps on.

2) You have the base VPS set up using Ubuntu Server 22.04 or newer. (This may work on other operating systems, but the steps may differ.)

3) You can access the newly created VPS over SSH either as root or a user with full sudo privileges. Make sure you are using VERY strong passwords that are 24+ random characters for your SSH passwords. Or even better, consider using SSH keys and disabling passwords for SSH altogether. I cannot emphasize this enough.

4) The VPS is not behind a vendor provided firewall (or the firewall is disabled)

5) An active CloudFlare account. (A free account works totally fine for this)

6) Your own domain (e.g., example.com) that is using CloudFlare’s name servers. You can buy a domain from CloudFlare as well if you need to; they offer very reasonable prices for domains as well as multi-year purchases.

7) You have added “proxied” DNS “A” records for each of the subdomains we are working with (npm, portainer, drawio, and whoogle) and have pointed them to the IP address of your VPS server.

Initial Server Setup – Preparing the Server

The steps in this section should be performed as either the root user, or another user that is a member of the “sudo” group.

Update and Upgrade the VM

apt update && apt upgrade -y

After your server has been updated, I highly recommend rebooting it before proceeding.

Setup UFW Firewall

Set the default rules to deny incoming connections and allow outgoing connections:

sudo ufw default deny incoming

sudo ufw default allow outgoing

Allow SSH Access

Enable SSH access to manage your server:

⚠️NOTE: This command allows SSH through the UFW firewall from anywhere. If you want to only allow traffic from your IP address, skip this command and use the one below it.

# This command allows SSH connections from anywhere

sudo ufw allow ssh

If you want to allow SSH only from a specific IP address for added security, use the command below after adding your IP address to it. Keep in mind that if your IP address changes, you may not be able to get back in via SSH if you do it this way. However, most VPS providers give you an alternate way to reach the console to make changes if need be. If you do not know what your IP address is, any “what is my IP” website will show it.

# This command only allows SSH connections from your IP address.

# Replace <your-ip-address> with your actual IP address.

sudo ufw allow from <your-ip-address> to any port 22

Allow Web Traffic

Enable HTTP (port 80) and HTTPS (port 443) traffic for web services:

sudo ufw allow 80

sudo ufw allow 443

Enable UFW

Activate the firewall to enforce the rules:

sudo ufw enable

Verify UFW Status

Check the current status and rules to ensure everything is configured correctly:

sudo ufw status verbose

You should see the rules you’ve added, along with the default policies.
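If you would rather check the rules with a script than by eye, something like the following may help. It is only a sketch: the helper names `ufw_allows_port` and `missing_ufw_ports` are made up for this example, and the pattern assumes the usual `ufw status` table layout where each rule line starts with the port (optionally followed by `/tcp`) and then the word ALLOW.

```shell
# Succeeds if the ufw status text on stdin has an ALLOW rule for the port.
# Assumes rule lines look like "22/tcp   ALLOW   Anywhere" or "80   ALLOW IN   Anywhere".
ufw_allows_port() {
  port=$1
  grep -Eq "^${port}(/tcp)?[[:space:]]+ALLOW"
}

# Print every expected port that has no ALLOW rule in the given status text.
# Usage: missing_ufw_ports "STATUS_TEXT" PORT [PORT...]
missing_ufw_ports() {
  status_text=$1
  shift
  for port in "$@"; do
    printf '%s\n' "$status_text" | ufw_allows_port "$port" || printf '%s\n' "$port"
  done
}
```

For example, `missing_ufw_ports "$(sudo ufw status)" 22 80 443` prints nothing when all three rules are present. Keep in mind this only inspects what UFW reports, which is exactly the view Docker can bypass.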

Install Docker and Docker Compose

# Install dependencies

apt install apt-transport-https ca-certificates curl software-properties-common -y

# Add Docker's GPG key and repository

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

# Install Docker

apt update # Yes, run it again!

apt install docker-ce docker-ce-cli containerd.io -y

# Install Docker Compose plugin

apt install docker-compose-plugin -y

Enable Docker Service

# Ensure Docker starts on boot as the root user

systemctl enable docker

systemctl start docker

Create a Non-Root User – I used “dockmin” in this build. Use what you like.

# Create a non-root user

adduser dockmin

usermod -aG docker dockmin

Switch to the non-root user for all subsequent steps

# Switch to the non-root user

su - dockmin

Docker Container Setup

All steps below this point should be performed as the non-root user we created above.

Create the Docker “Proxiable” network.

# Create the "proxiable" network

docker network create proxiable

Verify the “proxiable” network has been created.

# Verify the network has been created

docker network ls

Create Project Structure

People have different ways they like to arrange their Docker containers on their servers. My personal preference is to create a docker folder under my non-root user’s home folder, and then create a separate folder under it for each container/stack. You do not have to do it this way. It’s just a personal preference. But the instructions are written the way I do it.

# Create the folder structure for our containers

cd ~

mkdir -p ~/docker/{nginx-proxy-manager,drawio,whoogle-search,portainer}

Create Docker Compose file for Nginx Proxy Manager (NPM)

# Create the docker-compose.yml for NPM

nano ~/docker/nginx-proxy-manager/docker-compose.yml

Add the following to the new file for NPM and save and exit the file.

services:
  nginx-proxy-manager:
    image: jc21/nginx-proxy-manager:latest
    container_name: nginx-proxy-manager
    restart: unless-stopped
    ports:
      - "80:80"     # This port stays exposed by design
      - "443:443"   # This port stays exposed by design
      - "81:81"     # This port initially exposed; later restricted
    volumes:
      - ./data:/data
      - ./letsencrypt:/etc/letsencrypt
    networks:
      - proxiable

networks:
  proxiable:
    external: true

Start the NGINX Proxy Manager Container

# Start Nginx Proxy Manager

cd ~/docker/nginx-proxy-manager

docker compose up -d

⚠️ At this point, I recommend that you go ahead and log in to NPM at http://<server-ip>:81 and change your password right away. The reason is that you now have the NPM management interface exposed to the internet with only the default credentials to protect it. (Later we will set up secure access and then change the password again. I would also recommend limiting access to this container using a CloudFlare tunnel or a VPN of some kind.)
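The container can take a little while to come up the first time, so before opening the browser it may be worth confirming that the admin UI is actually answering. Here is a minimal sketch, assuming `curl` is installed on the machine you run it from; `wait_for_http` is just a helper name I made up for this example.

```shell
# Poll a URL until it answers with a non-error HTTP status, or give up.
# Usage: wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_http() {
  url=$1
  attempts=${2:-30}
  delay=${3:-2}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -f: treat HTTP errors (>= 400) as failure, -s: silent,
    # --max-time: cap each individual try at 5 seconds
    if curl -fs --max-time 5 -o /dev/null "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

For example: `wait_for_http "http://<server-ip>:81" && echo "NPM admin UI is up"`.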

Create Docker Compose File for Drawio

nano ~/docker/drawio/docker-compose.yml

Add the following to the new file for drawio and save and exit the file.

services:
  drawio:
    image: jgraph/drawio:latest
    container_name: drawio
    restart: unless-stopped
    expose:
      - "8080"   # This should be the internal port for the container
    networks:
      - proxiable

networks:
  proxiable:
    external: true

Start the Draw.io Container

# Start draw.io

cd ~/docker/drawio

docker compose up -d

Create Docker Compose file for Whoogle

nano ~/docker/whoogle-search/docker-compose.yml

Add the following to the new file for whoogle and save and exit the file.

services:
  whoogle-search:
    image: benbusby/whoogle-search:latest
    container_name: whoogle-search
    restart: unless-stopped
    expose:
      - "5000"   # This should be the internal port for the container
    networks:
      - proxiable

networks:
  proxiable:
    external: true

Start the Whoogle container

cd ~/docker/whoogle-search

docker compose up -d

Create Docker Compose file for Portainer

nano ~/docker/portainer/docker-compose.yml

Add the following to the new file for Portainer and save and exit the file.

services:
  portainer:
    image: portainer/portainer-ce:latest
    container_name: portainer
    restart: unless-stopped
    expose:
      - "9000"   # This should be the internal port for the container
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - ./data:/data
    networks:
      - proxiable

networks:
  proxiable:
    external: true

Start Portainer

cd ~/docker/portainer

docker compose up -d

Stop Portainer’s container (for now)

⚠️ The newly created Portainer container expects you to log in right away and set your admin password. However, we cannot actually access the UI at the moment because the reverse proxy portion of its setup is not complete yet. As a result, Portainer will “time out” its initial login page for security reasons. Since we cannot log in right now, we might as well stop the container until AFTER we have the proxy host and SSL cert set up for it.

cd ~/docker/portainer

docker compose stop

At this point the other containers should all be up and running. So now we can begin the process of getting the CloudFlare API token needed for SSL retrieval and setting up NGINX Proxy Manager to retrieve those certs and serve up those web pages.

Obtaining SSL Certificates via CloudFlare

The DNS challenge method is used here because it allows for seamless domain validation without requiring any public-facing HTTP or HTTPS endpoints. With the DNS challenge, the certificate is issued once you prove ownership of the domain through DNS records, making it both secure and straightforward. This is where you will need a CloudFlare account with CloudFlare providing the name servers for your domain. So if you do not have a CloudFlare account set up with CloudFlare as the name servers for the domain, STOP here and go set that up (it’s free). Then return and continue.

- Obtain a CloudFlare API token to manage the DNS for your domain:

  - Log in to your Cloudflare dashboard.

  - Click the little “person” icon in the upper right corner and then click on “My Profile”.

  - Then on the left side of the page, click on API Tokens.

  - Click the Create Token button on the right side of the page.

  - Click the Use template button to the right of the “Edit zone DNS” option.

  - Click the little pencil icon to the right of “Token name: Edit zone DNS” and give the token a name that identifies what it’s for. I like to use something like “Example.com – DNS ONLY”.

  - Under Permissions, set permissions as follows:

    - Zone: DNS: Edit

  - Under Resources, set the settings as follows:

    - Include: Specific Zone: the domain you are working with (example.com)

  - Scroll down and click “Continue to summary”.

  - Click the “Create Token” button.

  - On the next screen you will be given the new token. Before you do anything else, COPY and SAVE this token in a safe and secure place. You will need it later in these instructions and it will not be shown to you again! If you fail to do this, you will have to create a new token.
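Before moving on, you may want to confirm that the token you saved actually works. Cloudflare offers a token verification endpoint for this. The sketch below assumes `curl` is installed; the helper name `verify_cf_token` and the variable `CF_API_TOKEN` are my own inventions for illustration, and the check simply looks for an active status in the JSON reply rather than parsing it properly.

```shell
# Ask Cloudflare whether an API token is valid and active.
# Usage: verify_cf_token TOKEN
verify_cf_token() {
  token=$1
  response=$(curl -fsS \
    -H "Authorization: Bearer ${token}" \
    "https://api.cloudflare.com/client/v4/user/tokens/verify") || return 1
  # A working token is expected to yield JSON containing "status":"active"
  printf '%s\n' "$response" | grep -Eq '"status"[[:space:]]*:[[:space:]]*"active"'
}
```

For example, with the token stored in a variable so it does not end up in your shell history: `verify_cf_token "$CF_API_TOKEN" && echo "token OK"`.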

Configuring the NGINX Reverse Proxy Manager and obtaining SSL certs

Adding Proxy Hosts to NPM

- Log in to NGINX Proxy Manager: Open your browser and go to http://<server-ip>:81.

- Create Proxy Hosts: Go to HOSTS > PROXY HOSTS > ADD PROXY HOST and add a new proxy host for each of our services using the information below. A helpful tip for NPM: after you fill in the domain name, hit the TAB key to turn it into a “chip”.

  - For NPM:

    - Domain Names: Enter npm.<your-domain.com>

    - Scheme: http

    - Forward Hostname/IP: Enter 127.0.0.1

    - Forward Port: Enter 81

    - Block Common Exploits: Enable this option.

    - Leave the SSL section empty for now (you will configure SSL in a later step).

    - Click Save.

  - For Draw.io:

    - Domain Names: Enter drawio.<your-domain.com>

    - Scheme: http

    - Forward Hostname/IP: Enter drawio

    - Forward Port: Enter 8080

    - Block Common Exploits: Enable this option.

    - Leave the SSL section empty for now (you will configure SSL in a later step).

    - Click Save.

  - For Whoogle:

    - Domain Names: Enter whoogle.<your-domain.com>

    - Scheme: http

    - Forward Hostname/IP: Enter whoogle-search

    - Forward Port: Enter 5000

    - Enable Block Common Exploits.

    - Leave SSL settings empty for now.

    - Click Save.

  - For Portainer:

    - Domain Names: Enter portainer.<your-domain.com>

    - Scheme: http

    - Forward Hostname/IP: Enter portainer

    - Forward Port: Enter 9000

    - Enable Block Common Exploits.

    - Leave SSL settings empty for now.

    - Click Save.

Obtain SSL Certificates in NPM

- Access SSL Certificates Section:

- Navigate to the SSL Certificates tab in the NPM web UI.

- Click Add SSL Certificate.

- Select Let’s Encrypt.

- Generate Certificates:

- Domain Names: Enter the FQDN for your domain (e.g.,

drawio.<your-domain.com>for drawio).

and HIT THE TAB KEY. - Email Address for Let’s Encrypt: Enter a valid email address. (I have never been spammed by them FWIW)

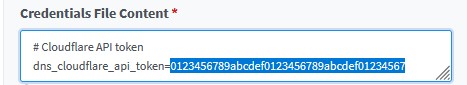

- Toggle: Use DNS Challenge to ON.

- DNS Provider: Select Cloudflare.

- API Token: Paste in the Cloudflare API token (you created earlier) and overwrite the example token only which is the long string to the right of the “=”. Leave everything else in this field alone.

- Toggle the “I agree to the Let’s Encrypt Terms of Service” to ON.

- Click Save to issue the certificate.

- After a while, you should see the new SSL cert populate into the list of SSL Certificates.

- Domain Names: Enter the FQDN for your domain (e.g.,

Repeat the above steps to issue SSL certificates for each of the other domains (including npm);

whoogle.<your-domain.com> , portainer.<your-domain.com> and npm.<your-domain.com>

Configuring SSL on Proxy Hosts

- Edit Proxy Hosts:

  - Still within NPM, go to HOSTS > PROXY HOSTS.

  - For each proxy host (e.g., NPM, Draw.io, Whoogle, Portainer), click the three vertical dots on the right side and click Edit.

- Enable SSL:

  - In the SSL tab, select the SSL certificate for the domain you are working on.

  - Enable the following options:

    - Force SSL: Redirects all HTTP traffic to HTTPS.

    - HTTP/2 Support: Allows faster, multiplexed connections.

    - HSTS: Instructs browsers to connect only via HTTPS for added security.

  - Click SAVE.

- NOTE: For Portainer only – Enable WebSockets and add advanced directives: Portainer’s UI relies on WebSocket connections for real-time updates and dynamic interactions. Without these directives in place, the proxy may block or fail to properly route WebSocket traffic, resulting in issues like missing UI elements or unresponsive features.

  - Edit the Proxy Host for Portainer and toggle “WebSockets Support” to ON.

  - Under the Advanced tab, add the following to the Custom Nginx Configuration field and click Save.

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

📝Explanation of SSL Options:

- HTTP/2 Support: Improves page load speed by allowing multiplexing of requests over a single connection. Use this unless you are dealing with legacy clients.

- HSTS (HTTP Strict Transport Security): Instructs browsers to only connect over HTTPS. Enable this unless you expect clients that might access the site using HTTP.
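If you want to confirm from the command line that Force SSL and HSTS took effect, the two small helpers below are one way to do it. This is a sketch that assumes `curl` is available; `http_redirect_info` and `has_hsts` are hypothetical names I picked for this example. Because the DNS records are proxied through CloudFlare, what you see may also reflect your CloudFlare SSL settings and not only NPM.

```shell
# Print the status code and redirect target for a plain-HTTP request.
# Usage: http_redirect_info HOSTNAME
http_redirect_info() {
  curl -s -o /dev/null --max-time 10 \
    -w '%{http_code} %{redirect_url}\n' "http://$1/"
}

# Succeed if the HTTPS response carries a Strict-Transport-Security header.
# Usage: has_hsts HOSTNAME
has_hsts() {
  curl -sI --max-time 10 "https://$1/" | grep -qi '^strict-transport-security:'
}
```

For example: `http_redirect_info drawio.<your-domain.com>` should print a 3xx code followed by an https:// URL when Force SSL is on, and `has_hsts drawio.<your-domain.com> && echo "HSTS enabled"` reports on the header.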

⚠️ Now that we have the SSL cert installed and active for Portainer, this would be a good opportunity to complete its setup by starting up its container, connecting to its web UI, and logging in for the first time and changing our password. To do this we need to first start the container up. Back in your SSH connection to the server, do the following.

cd ~/docker/portainer

docker compose up -d

⚠️⚠️ NOTE: Once the container is started, open your browser and go to https://portainer.<your-domain.com> and you should be greeted with an initial Portainer login screen where you can set up the initial admin user. This must be done within 5 minutes of starting the container! The screen will show a default user of “admin” and allow you to set a password for this user. I recommend changing the user name from “admin” to something else and then setting a strong password. If you started the container and missed the 5-minute window to set up this user, just restart the container with the “docker restart portainer” command in the terminal and try again.

Verification

- Test each domain in your browser (e.g., https://drawio.<your-domain.com>).

- Verify that:

  - The site loads over HTTPS with a valid SSL certificate.

  - HTTP traffic is redirected to HTTPS.

- Use a tool like nmap or curl to ensure no direct access is available to the container ports (8080, 5000, 9000) from outside the proxiable network.
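As a complement to scanning from the outside, you can also ask Docker itself which containers have ports published on every interface. The filter below is a rough sketch: `list_public_bindings` is a name I made up, and it assumes the port column looks the way `docker ps` usually prints it (for example `0.0.0.0:80->80/tcp` or `[::]:443->443/tcp` for published ports, and a bare `8080/tcp` for ports that are only exposed internally).

```shell
# Keep only the lines where some port is published on all interfaces
# (0.0.0.0 for IPv4, [::] or ::: for IPv6).
# Reads "NAME PORTS" lines on stdin and prints the matching ones.
list_public_bindings() {
  grep -E '(^|[[:space:],])(0\.0\.0\.0|\[::\]|::):[0-9-]+->' || true
}
```

Run it as `docker ps --format '{{.Names}} {{.Ports}}' | list_public_bindings`. At this stage only nginx-proxy-manager should show up (with ports 80, 443, and, for now, 81). If drawio, whoogle-search, or portainer appear in the output, one of them still has a `ports` mapping.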

If everything is working and you have confirmed that you can reach each of the sites via HTTPS at their FQDN without port numbers, then you are done. Well, almost. We still need to lock down the web UI of Nginx Proxy Manager a little better. This is very easy to do. We just need to alter the docker-compose.yml file for NGINX Proxy Manager so that port 81 is no longer published to the outside.

SSH into the server again, connect as your dockmin user, and open the docker-compose.yml file for NPM with the following command.

# Edit the docker-compose.yml for NPM

nano ~/docker/nginx-proxy-manager/docker-compose.yml

Modify the “81:81” to be “127.0.0.1:81:81” as shown below. This has the effect of binding host port 81 to the loopback address only, so it can be reached from the VPS itself but not from the internet.

services:
  nginx-proxy-manager:
    image: jc21/nginx-proxy-manager:latest
    container_name: nginx-proxy-manager
    restart: unless-stopped
    ports:
      - "80:80"             # This port stays exposed by design
      - "443:443"           # This port stays exposed by design
      - "127.0.0.1:81:81"   # Post install change
    volumes:
      - ./data:/data
      - ./letsencrypt:/etc/letsencrypt
    networks:
      - proxiable

networks:
  proxiable:
    external: true

Once you have made the needed change, save and close the docker-compose.yml file. Then restart the container with:

cd ~/docker/nginx-proxy-manager

docker compose stop && docker compose up -d

Now verify that you can no longer access the NPM web UI by pointing your browser to http://<server-ip>:81. If things are working as intended, it should no longer load. However, you should still be able to access the NPM web UI at https://npm.<your-domain.com>.

NOTE: If you are still able to access NPM at http://<server-ip>:81, it could be some cached information in your browser playing tricks on you. To test this, either close all your browsers and try again, or simply open a new PRIVATE window and try it again. If it is still accessible, retrace your steps and make sure you didn’t miss anything.
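Browser caching aside, you can also ask Docker directly where port 81 is bound. The helper below is a small sketch built on `docker port`; the name `bound_to_loopback_only` is hypothetical, and it assumes `docker port CONTAINER PORT` prints one `address:port` binding per line.

```shell
# Succeed only if every host binding for a container port is on loopback.
# Fails if the port is not published at all or is bound to a wider address.
# Usage: bound_to_loopback_only CONTAINER PORT
bound_to_loopback_only() {
  bindings=$(docker port "$1" "$2") || return 1
  [ -n "$bindings" ] || return 1
  # Any line that does not start with 127.0.0.1: or [::1]: is a wider binding.
  ! printf '%s\n' "$bindings" | grep -Eqv '^(127\.0\.0\.1|\[::1\]):'
}
```

For example: `bound_to_loopback_only nginx-proxy-manager 81 && echo "port 81 is local only"`.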

The setup is now complete…

TL;DR

If you’re already familiar with Docker, reverse proxies, and container setups but are tackling the challenge of running Docker on an exposed VPS like I was, here’s the basics of what we’re doing differently and why it works.

The Problem (revisited)

By default, Docker modifies iptables to publish container ports directly on the host’s network interfaces (in this case, the host sits directly on the internet). This bypasses UFW and leaves services like admin dashboards and other internal services completely exposed and vulnerable. Relying solely on UFW for security gives a false sense of safety, as ufw status won’t reflect Docker’s modifications.

Our Solution

- Create a custom proxiable network:

  - All containers are attached to a shared, isolated Docker network named proxiable.

  - Instead of using the ports directive to bind container ports to the host, we use the expose directive in docker-compose.yml files and list the INTERNAL port for the container. This keeps the port reachable on the internal proxiable network only, because without a ports mapping Docker adds no iptables rules that publish it externally.

- Nginx Proxy Manager (or your favorite reverse proxy):

  - Acts as the gateway, routing external traffic from subdomains to specific containers.

  - Provides automated SSL / HTTPS for secure connections using Let’s Encrypt certificates.

- Securing NPM Access:

  - Recommended: Once set up, port 81 should be blocked or restricted and accessed using Cloudflare tunnels, a VPN, or similar. (This is a standard consideration for any install of NPM and is not limited to this setup.)

  - Alternative: A proxy host with SSL can be created for NPM within NPM (just like the other containers) and used to secure the admin interface. (This is the method I use in this tutorial as it is the simplest.)
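To make the pattern concrete, here is a small sketch that prints a compose file in the same shape as the ones used in this guide: `expose` only, no `ports`, attached to the external proxiable network. The function name `print_proxied_compose` is made up for illustration, and real services will often need extra settings (volumes, environment variables) that this skeleton leaves out.

```shell
# Print a docker-compose.yml for a service that is reachable only over the
# shared proxy network: it uses `expose`, never `ports`.
# Usage: print_proxied_compose SERVICE IMAGE INTERNAL_PORT [NETWORK]
print_proxied_compose() {
  service=$1
  image=$2
  port=$3
  network=${4:-proxiable}
  cat <<EOF
services:
  ${service}:
    image: ${image}
    container_name: ${service}
    restart: unless-stopped
    expose:
      - "${port}"
    networks:
      - ${network}

networks:
  ${network}:
    external: true
EOF
}
```

For example: `print_proxied_compose whoogle-search benbusby/whoogle-search:latest 5000 > ~/docker/whoogle-search/docker-compose.yml`.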

Why It Works

- Stops UFW Bypass: Because container ports are not bound to the host, Docker has no reason to add iptables rules that open them to the outside.

- Centralized Proxy: NPM handles all external traffic, ensuring only necessary ports (80/443) are exposed.

- Enhanced Security: Containers remain isolated, with access controlled through NPM and the proxiable network.

- Scalable and Flexible: Adding new containers or domains requires minimal changes.

Caveat to our method

Container names on the proxiable network must be unique, because NPM reaches each service by its container name. The internal ports themselves do not have to be unique: each container gets its own IP address on the network, so two containers can both expose port 8080 without conflict as long as NPM forwards to the right name. If you would rather keep groups of services apart from each other, you can create a second shared and isolated network (e.g., proxiable2 and so on) for the new containers and then make sure that NPM is also attached to that network.
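If you do go the route of an additional network, creating it and attaching NPM can be sketched roughly like this. The helper names `ensure_network` and `add_proxy_network` are my own, and the default container name assumes you kept nginx-proxy-manager as used in this guide.

```shell
# Create a Docker network only if it does not exist yet.
# Usage: ensure_network NAME
ensure_network() {
  docker network inspect "$1" >/dev/null 2>&1 || docker network create "$1"
}

# Create an extra proxy network and attach the reverse proxy container to it.
# Usage: add_proxy_network NETWORK [PROXY_CONTAINER]
add_proxy_network() {
  ensure_network "$1" && docker network connect "$1" "${2:-nginx-proxy-manager}"
}
```

For example: `add_proxy_network proxiable2`. Note that `docker network connect` reports an error if the container is already attached, and a manual attachment like this is lost when the NPM container is recreated. To make it stick, also list the new network under `networks` in NPM’s docker-compose.yml.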

Thanks for sticking with me to the end! I hope this guide has provided you with some valuable information to help keep your docker containers safe on the web. If you found this helpful, feel free to share it with others who might benefit!

– Byte Maverick